Overview

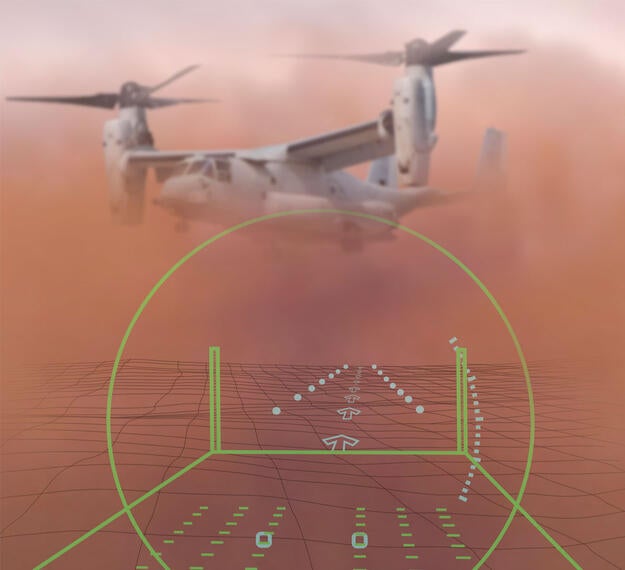

The Degraded Visibility Landing System (DVLS) enhances pilots' situational awareness by providing a low-latency, binocular helmet-mounted display (HMD) with augmented reality symbology, including obstructions, threat data and synthetic terrain, along with a high design assurance level primary flight display. Navigation data from mission planning databases, drift and flight cues and an intuitive landing zone display help pilots land safely in many types of degraded environments. The open-interface system supports fusion of data from multiple sources and sensors. This enables a fully passive degraded visual environment capability (fused EO/IR imagery and symbology), or integration with active sensing technologies such as radar or LIDAR.

Automated boresighting and alignment, low size, weight and power (SWaP) components, and a simple fiducial-sticker installation that requires no complex cockpit mapping make the system scalable to a wide variety of platforms.

Additional options include a non-HMD version that can be retrofitted into existing cockpits and displays and can interface with existing sensors and components.

Features

- Low-latency architecture and software: Our architecture optimizes the speed at which data is fused and delivered to the operator, which ensures accurate placement of symbols on real-world imagery, reduces blur and improves orientation awareness for pilots

- Minimized geospatial jitter: The tight coupling of geospatial data with platform and HMD pointing information keeps symbols accurate and locked to the underlying terrain features, which improves pilot confidence and the ability to land in degraded environments

- Simple design: Our DVLS is modular. With only a few hardware elements, DVLS is easy to install, integrate and use. Auto-alignment and boresighting features ease pilot workload and speed time-to-flight

- Streamlined landing zone placement: The system allows placement of preplanned and impromptu landing zones for fixed and rotary-wing aircraft

- Automated cockpit mapping and alignment: DVLS uses navigational and sensor data to help pilots maintain situational awareness and visual flight cues, even in brownout conditions

- Pointing accuracy < 5 mrad

- Latency < 10 ms

- Drift cues displayed at < 1 kt horizontal

- Drift cues displayed at < 100 fpm vertical

- Baseline symbology based on the U.S. Army Brown-Out Symbology System (BOSS) / Integrated Cueing Environment (ICE) standard

- Automated boresighting

- Pre-integrated with U.S. DoD Long Wave IR imager and WESCAM MX™-Series gimbals from L3Harris
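To put the pointing, latency and drift figures above into physical terms, here is a minimal Python sketch that converts them into ground distances and SI velocities. It assumes simple geometry with no head-motion prediction, a hypothetical 100 m slant range and a hypothetical 30 deg/s head rotation rate. Neither value comes from the DVLS specification, and the function names are illustrative only, not part of any DVLS interface.

```python
import math

# Exact unit definitions: 1 international knot = 1852 m/h; 1 ft = 0.3048 m.
KNOT_TO_MPS = 1852.0 / 3600.0
FPM_TO_MPS = 0.3048 / 60.0


def symbol_offset_m(pointing_error_mrad: float, range_m: float) -> float:
    """Ground offset of a world-locked symbol caused by an angular pointing error."""
    return range_m * math.tan(pointing_error_mrad / 1000.0)


def angular_lag_mrad(latency_s: float, head_rate_deg_s: float) -> float:
    """Angular lag of a symbol while the head turns at a constant rate.

    Assumption: no motion prediction, so the symbol is drawn where the head
    pointed latency_s seconds ago.
    """
    return math.radians(head_rate_deg_s) * latency_s * 1000.0


if __name__ == "__main__":
    RANGE_M = 100.0          # hypothetical slant range to the landing zone
    HEAD_RATE_DEG_S = 30.0   # hypothetical pilot head rotation rate

    offset = symbol_offset_m(5.0, RANGE_M)
    lag = angular_lag_mrad(0.010, HEAD_RATE_DEG_S)
    print(f"5 mrad at {RANGE_M:.0f} m -> {offset:.2f} m ground offset")
    print(f"10 ms at {HEAD_RATE_DEG_S:.0f} deg/s -> {lag:.2f} mrad lag")
    print(f"1 kt = {KNOT_TO_MPS:.3f} m/s; 100 fpm = {100 * FPM_TO_MPS:.3f} m/s")
```

Under these assumptions, a 5 mrad error at 100 m is roughly 0.5 m on the ground, and 10 ms of uncompensated latency during a 30 deg/s head turn produces about 5.2 mrad of lag, the same order as the pointing-accuracy figure. The drift thresholds correspond to about 0.514 m/s horizontally and 0.508 m/s vertically.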

US Marine Corps Selects L3Harris Technologies to Help Pilots Safely Land in Degraded Visual Environments
